In this hands-on article, I will show you how to implement semantic search in Python using the CohereAI and Pinecone libraries.

Table of Contents

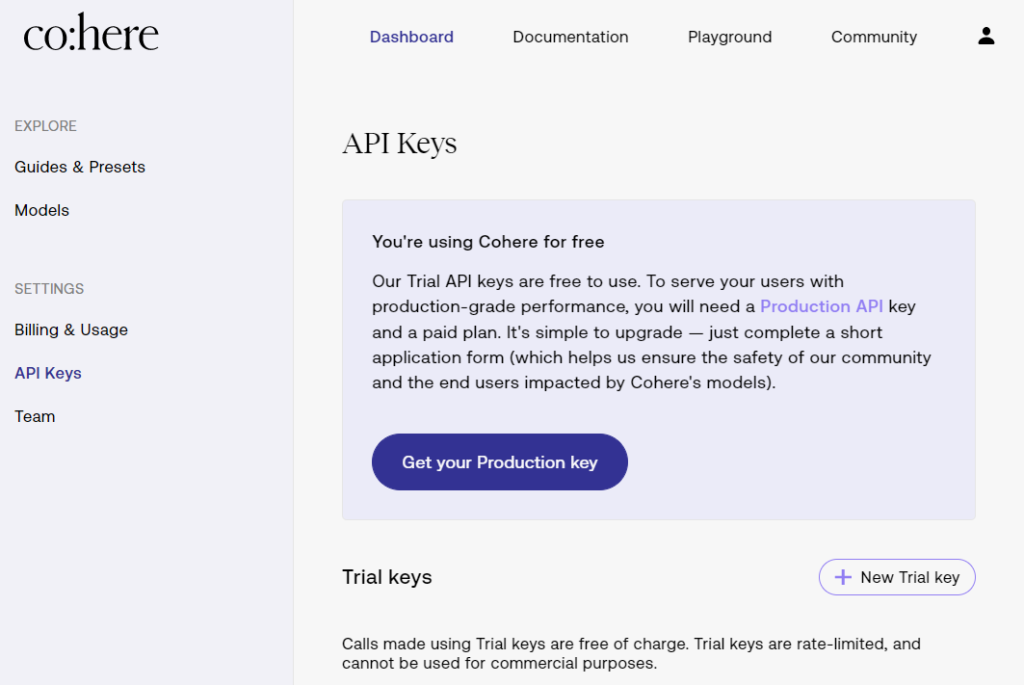

Get your CohereAI API key

https://cohere.ai/

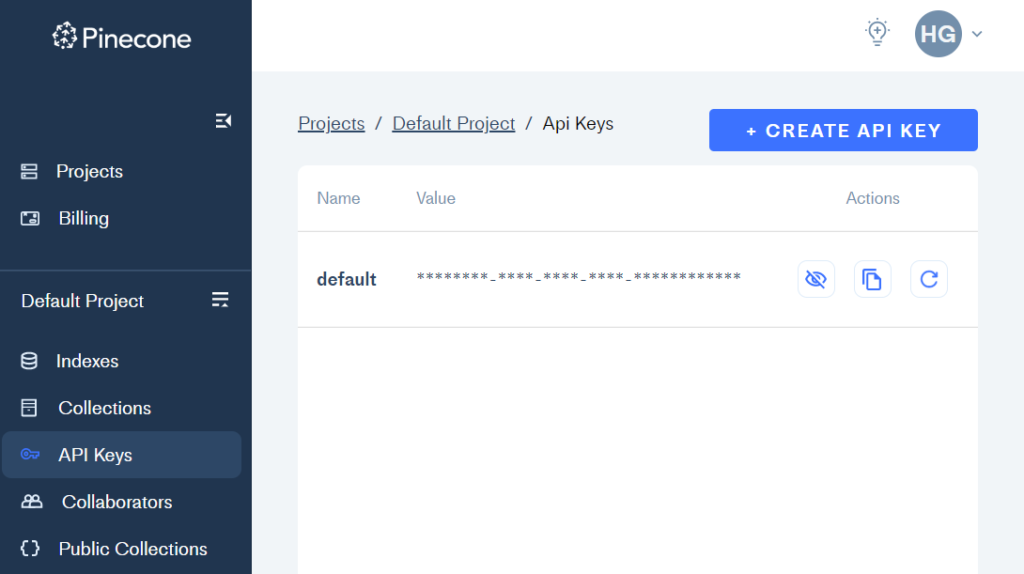

Get your Pinecone API key

https://www.pinecone.io/

Prepare your data

Which data you use depends on your use case. To follow along, you can use this sample:

list_of_travel_ideas = [
    "Hiking trails in the Rocky Mountains",
    "Popular beaches in California",
    "Museums in Paris",
    "Ski resorts in Colorado",
    "National parks in Australia",
    "Historical landmarks in Rome",
    "Famous landmarks in New York City",
    "Breweries in Portland",
    "Golf courses in Scotland",
    "Wineries in Napa Valley",
    "Horseback riding trails in the Appalachian Mountains",
    "Diving spots in the Great Barrier Reef",
    "Art galleries in London",
    "Biking trails in the Netherlands",
    "Sightseeing tours in Japan",
    "Amusement parks in Florida",
    "Zoos in South Africa",
    "National forests in the Pacific Northwest",
    "Ski resorts in the Swiss Alps",
    "Hiking trails in the Pyrenees",
    "Famous landmarks in Istanbul",
    "Museums in Berlin",
    "Beaches in Thailand",
    "Historical landmarks in Cairo",
    "Nature reserves in Costa Rica",
    "Ski resorts in the Canadian Rockies",
]

# Note: this list holds 20 IDs, while the list above holds 26 travel ideas
list_of_ids = [
    "A1B2C3", "D4E5F6", "G7H8I9", "J10K11", "L12M13N",
    "O14P15Q", "R16S17T", "U18V19W", "X20Y21Z", "A22B23C",
    "D24E25F", "G26H27I", "J28K29L", "M30N31O", "P32Q33R",
    "S34T35U", "V36W37X", "Y38Z39A", "B40C41D", "E42F43G",
]

I generated the above data with ChatGPT.

Next, create a pandas DataFrame from it:

import pandas as pd

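# Pair each ID with an activity. zip() stops at the shorter list, so activities without an ID are dropped.
my_data = pd.DataFrame(list(zip(list_of_ids, list_of_travel_ideas)), columns=['ID', 'Activity'])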
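If the list of IDs is shorter than the list of texts, pairing the two silently leaves some texts out. As a minimal sketch, assuming you do not need specific ID strings, you could derive one ID per text instead. The helper name `make_ids` and the `idea-` prefix are hypothetical and chosen only for illustration.

```python
def make_ids(texts, prefix="idea-"):
    """Return one unique, zero-padded string ID per text (hypothetical helper)."""
    width = len(str(len(texts)))
    return [f"{prefix}{i:0{width}d}" for i in range(len(texts))]


sample_texts = ["Museums in Paris", "Ski resorts in Colorado", "Breweries in Portland"]
sample_ids = make_ids(sample_texts)

# Every text gets exactly one ID, so nothing is lost when the lists are paired.
rows = list(zip(sample_ids, sample_texts))
print(rows)
```

The resulting `rows` can be passed to `pd.DataFrame(rows, columns=['ID', 'Activity'])` in the same way as the zipped lists above.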

Create Embeddings using Cohere

The code below uses CohereAI's API to create embeddings from the data above. Make sure to replace 'your_cohere_api_key' with your own Cohere API key.

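import cohere
import numpy as np

co = cohere.Client('your_cohere_api_key')

# Embed every activity; the result is a list with one vector per text.
# 'large' is the model name used in this article; check Cohere's documentation for the models currently available.
embeds = co.embed(
    model='large',
    texts=my_data["Activity"].tolist()
).embeddings

# shape is (number of texts, embedding dimension)
shape = np.array(embeds).shape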
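Pasting the API key into the script works for a quick test, but it is easy to leak the key that way. One possible alternative, sketched here under the assumption that you export the key as an environment variable, is to read it at startup. The helper `get_api_key` and the variable name `COHERE_API_KEY` are hypothetical, not part of either library.

```python
import os


def get_api_key(var_name):
    """Read an API key from the environment and fail early if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first")
    return key


# Usage sketch (the variable name COHERE_API_KEY is an assumption):
# co = cohere.Client(get_api_key("COHERE_API_KEY"))
```

The same helper could be used for the Pinecone key in the next step.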

Import embeddings into Pinecone

Now we will store the embeddings in a Pinecone vector database.

# First, import pinecone. If it is not installed yet, install it with pip install pinecone-client
# (the pinecone.init API used here belongs to version 2.x of that client).
import pinecone

# Initialize pinecone
pinecone.init(api_key='your_pinecone_api_key', environment='us-west1-gcp')

# create the pinecone index; its dimension must match the embedding size
index_name = 'travel-activity'

pinecone.create_index(
    index_name,
    dimension=shape[1],
    metric='cosine'
)

# connect to the newly created index
index = pinecone.Index(index_name)

# upsert the embeddings into the pinecone index
batch_size = 128  # vectors per upsert request (arbitrary value; tune as needed)

ids = [str(i) for i in range(shape[0])]

# create list of metadata dictionaries
meta = [{'text': text} for text in my_data['Activity']]

# create list of (id, vector, metadata) tuples to be upserted
to_upsert = list(zip(ids, embeds, meta))

for i in range(0, shape[0], batch_size):
    i_end = min(i+batch_size, shape[0])
    index.upsert(vectors=to_upsert[i:i_end])
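The loop above sends the vectors in slices so that no single request grows too large. The slicing logic on its own can be sketched as a small generator. This is an illustration only: `chunked` is a hypothetical helper, and the toy tuples stand in for the real `to_upsert` list, so no Pinecone call is made.

```python
def chunked(items, batch_size):
    """Yield consecutive slices of items, each with at most batch_size elements."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# Toy (id, vector, metadata) tuples standing in for the real to_upsert list.
toy = [(str(i), [0.0, 1.0], {"text": f"item {i}"}) for i in range(5)]
sizes = [len(batch) for batch in chunked(toy, 2)]
print(sizes)  # [2, 2, 1]
```

With such a helper, the upsert loop would reduce to calling `index.upsert(vectors=batch)` once per batch.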

Search

Now we are ready to perform semantic search queries.

query = 'fun activity in new york'

# Embed the query with the same model that was used for the data
xq = co.embed(
    texts=[query],
    model='large',
    truncate='LEFT'
).embeddings

print(index.query(xq, top_k=5, include_metadata=True))

The query above returns the five most similar results, each with its stored metadata.
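To make "most similar" concrete: the index was created with the cosine metric, which scores two vectors by the cosine of the angle between them. The following is a brute-force sketch of that ranking on made-up three-dimensional vectors. The function names and the toy data are assumptions for illustration, and a vector database does not need to scan every vector this way.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, vectors, k):
    """Return the k (key, score) pairs with the highest cosine similarity."""
    scored = [(cosine_similarity(query_vec, vec), key) for key, vec in vectors.items()]
    scored.sort(reverse=True)
    return [(key, score) for score, key in scored[:k]]


# Made-up vectors; real embeddings have far more dimensions.
toy_vectors = {
    "landmarks": [0.9, 0.1, 0.0],
    "beaches": [0.1, 0.9, 0.0],
    "ski": [0.0, 0.2, 0.9],
}
results = top_k([1.0, 0.0, 0.0], toy_vectors, k=2)
print([key for key, _ in results])  # ['landmarks', 'beaches']
```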

Summary

You can extend this approach by building web applications that offer semantic search over books, podcasts, documentation, and similar content.

References