BLOOM is a GPT-3-like open-source AI model that can generate text in 59 languages: 46 natural languages and 13 programming languages.

The BLOOM model was created by BigScience, an open research collaboration. It has 176 billion parameters. In general, a model with more parameters tends to be more capable, although training data and methods matter as well.
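To put 176 billion parameters in perspective, here is a rough estimate of the memory needed just to store a model's weights. The estimate is the number of parameters times the bytes per parameter: 4 bytes in float32, 2 bytes in float16 or bfloat16. It ignores activations and other runtime overhead, so real usage is higher. The `bloom-1b3` row is an assumption: it uses the nominal 1.3 billion parameters suggested by the checkpoint's name.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes (10**9 bytes).

    Ignores activations, caches and framework overhead, so real usage is higher.
    """
    return num_params * bytes_per_param / 1e9


FULL_BLOOM_PARAMS = 176e9  # size of the full BLOOM model
SMALL_BLOOM_PARAMS = 1.3e9  # assumption: nominal size from the name "bloom-1b3"

for name, n in [("bloom (176B)", FULL_BLOOM_PARAMS), ("bloom-1b3", SMALL_BLOOM_PARAMS)]:
    fp32 = weight_memory_gb(n, 4)  # float32: 4 bytes per parameter
    fp16 = weight_memory_gb(n, 2)  # float16 / bfloat16: 2 bytes per parameter
    print(f"{name}: {fp32:.1f} GB in float32, {fp16:.1f} GB in 16-bit")
```

By this estimate, the full model needs hundreds of gigabytes just for its weights. That is far beyond a single free Colab GPU, which is why the code in step 4 loads a much smaller BLOOM checkpoint.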


BLOOM AI model is Open Source and Free

When you use OpenAI's GPT-3, you pay OpenAI for each use. BLOOM works differently. You can download the model and run it yourself at no charge, apart from the cost of the hardware. Because its weights are openly released, you can also modify it within the terms of its BigScience RAIL license.

Using BLOOM for AI Content generation

There are multiple ways to use BLOOM. Let's look at one of the easiest: Google Colab. With Colab you do not need to install anything on your computer or wrestle with various libraries. Its free tier is also generous enough for this tutorial, although the paid plans offer more performance.

1. Log in to Google Colab

Log in to your [Google Colab] account. If you don't have one, you can create one from the same link.

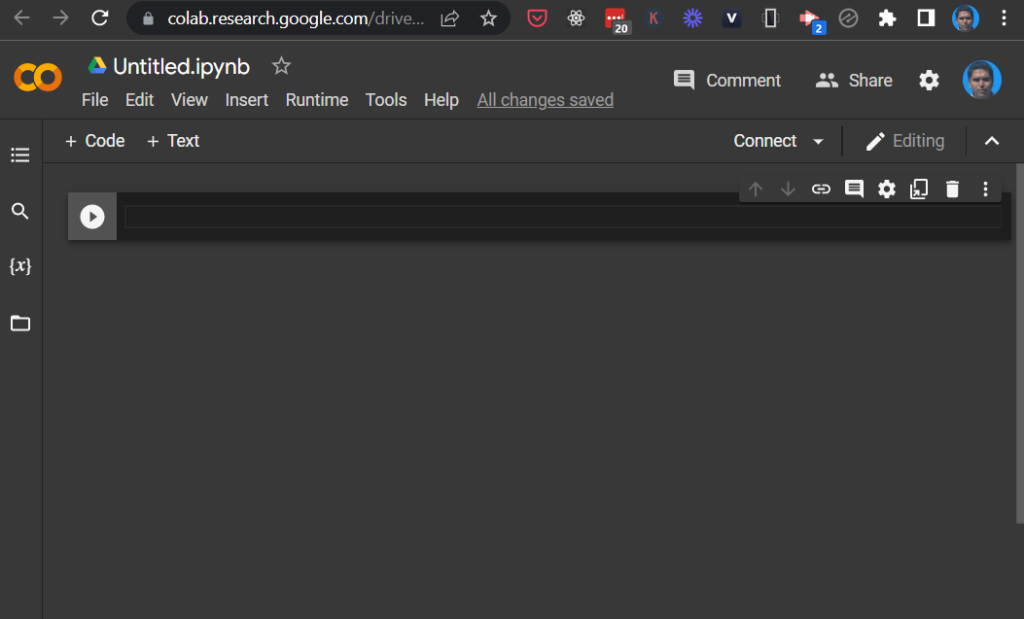

2. Create a new Colab Notebook

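Click File -> New notebook to create a new notebook. Then open Runtime -> Change runtime type and select GPU as the hardware accelerator, because the code in step 4 runs the model on a GPU.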

3. Install the transformers library in Google Colab

Type the code below into the first cell of the new notebook, then click the run button to the left of the cell to run it.

```
!pip install transformers -q
```
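Optionally, you can confirm in a new cell that the install worked before moving on. This sketch uses only Python's standard `importlib.metadata` module, and the helper name `installed_version` is our own. It also checks `torch`, because step 4 imports it. The tutorial never installs `torch`, which suggests it comes preinstalled on Colab.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package_name):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None


for pkg in ("transformers", "torch"):
    found = installed_version(pkg)
    print(f"{pkg}: {found if found else 'not installed'}")
```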

4. Write and run the code to generate content

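The full 176-billion-parameter model is far too large for a Colab GPU, so this code loads `bigscience/bloom-1b3`, a much smaller BLOOM checkpoint. Paste it into a new cell and run it:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer, set_seed
import torch

# Load a small BLOOM checkpoint and move it to the GPU
# (requires Runtime -> Change runtime type -> GPU).
model_name = "bigscience/bloom-1b3"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name, use_cache=True).to("cuda")

set_seed(424242)  # only matters if you switch to random sampling

prompt = "This is an article on space travel "
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

# Greedy decoding: always pick the most likely next token, so the same
# prompt gives the same text. repetition_penalty > 1 discourages loops.
sample = model.generate(
    **inputs,
    max_length=200,  # maximum total length in tokens, prompt included
    do_sample=False,
    repetition_penalty=2.0,
)

print(tokenizer.decode(sample[0], skip_special_tokens=True))
```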
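The `repetition_penalty=2.0` argument keeps greedy decoding from getting stuck repeating the same phrase. In Hugging Face `transformers` this is handled by `RepetitionPenaltyLogitsProcessor`. For every token already present in the sequence, it divides a positive score by the penalty and multiplies a negative score by it, so both end up lower. Below is a plain-Python sketch of that rule on a toy list of four scores. `apply_repetition_penalty` and `greedy_pick` are hypothetical helpers for illustration, not library functions.

```python
def apply_repetition_penalty(logits, previous_token_ids, penalty):
    """Return a copy of `logits` with already-seen tokens made less likely.

    logits: one score per vocabulary entry.
    previous_token_ids: ids of tokens already in the prompt or output.
    penalty: > 1.0 penalises repetition; 1.0 leaves the scores unchanged.
    """
    adjusted = list(logits)
    for token_id in set(previous_token_ids):
        score = adjusted[token_id]
        # Dividing a positive score or multiplying a negative one lowers it.
        adjusted[token_id] = score * penalty if score < 0 else score / penalty
    return adjusted


def greedy_pick(logits):
    """Greedy decoding: the id of the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])


# Toy vocabulary of 4 tokens; tokens 0 and 2 have already been generated.
logits = [4.0, 3.0, -1.0, 0.5]
penalised = apply_repetition_penalty(logits, [0, 2, 0], penalty=2.0)
print(penalised)               # [2.0, 3.0, -2.0, 0.5]
print(greedy_pick(logits))     # 0: without the penalty, token 0 repeats
print(greedy_pick(penalised))  # 1: with it, a different token wins
```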

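This prints text that continues the prompt. To generate different content, change `prompt` and run the code again. Loading the model is the slow part, so you may want to move everything from `set_seed` onward into its own cell and re-run only that cell. Because decoding here is greedy, re-running with the same prompt produces the same text.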
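Greedy decoding, which is also what `top_k=1` amounts to, always picks the single most likely token, so it leaves no room for variation. To get varied output from one prompt, `transformers` lets you pass `do_sample=True` together with `top_k` and `temperature` in the `model.generate(...)` call. The plain-Python sketch below shows roughly what those two settings do on a toy list of scores. `sample_top_k` is a hypothetical helper, not the library's implementation, which also supports other filters such as `top_p`.

```python
import math
import random


def sample_top_k(logits, k, temperature, rng):
    """Sample one token id from the k highest-scoring tokens.

    Lower temperature sharpens the distribution; k=1 reduces to greedy decoding.
    """
    # Keep only the k best token ids.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax weights over the temperature-scaled scores
    # (subtracting the max keeps exp() numerically stable).
    scaled = [logits[i] / temperature for i in top]
    highest = max(scaled)
    weights = [math.exp(s - highest) for s in scaled]
    return rng.choices(top, weights=weights, k=1)[0]


logits = [4.0, 3.0, -1.0, 0.5]
rng = random.Random(424242)  # a fixed seed makes the random choices repeatable

print([sample_top_k(logits, k=1, temperature=0.9, rng=rng) for _ in range(5)])  # [0, 0, 0, 0, 0]
print([sample_top_k(logits, k=3, temperature=0.9, rng=rng) for _ in range(5)])
```

With `k=1` every draw is token 0, just like greedy decoding. With `k=3`, tokens 1 and 3 can appear as well, and fixing the seed (as `set_seed` does in the tutorial) makes a given run repeatable.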